How effective is your psychologist?

In 1952 a British psychology professor named Hans Eysenck threw the cat amongst the pigeons in psychology circles by publishing an article that effectively stated “there is no evidence that psychological therapy works”. Eysenck’s analysis of the available data was both scathing and, unfortunately, true.

As you can imagine, the profession greeted this news with the kind of welcome usually reserved for cholera epidemics, but his question pricked a very serious nerve in the psyche of psychological therapy practitioners: where was the evidence? In the subsequent 50 years, psychologists have been remarkably effective not only at devising treatments that genuinely help people, but also at testing the effectiveness of these treatments in progressively more rigorous studies.

The gold standard of evidence in psychological treatment is what is called an efficacy trial. In one of these studies, a group of clients (typically around 100) is recruited. Half of them are randomly assigned to receive the active intervention, while the other half are given some kind of non-directive talking therapy. The two groups are then compared to see whether the active treatment reduces symptoms more successfully than talking alone. Only treatments that can be shown to outperform talking alone are considered “evidence-based therapies”.

As part of their training, psychologists learn about this suite of evidence-based therapies and how to use them with their clients. Therapies such as CBT, IPT, DBT, ACT and a variety of other three-letter acronyms are the techniques typically associated with evidence-based practice. The theory is that if psychologists use the approaches that have been shown to work in rigorous research studies, their clients will have the best chance of getting better.

Unfortunately, it is not quite that simple. In research studies, clients are carefully selected to have only one problem: depression, anxiety or an eating disorder, never more than one, and certainly never drug and alcohol problems as well. In the real world clients aren’t so simple; they often have several problems, all of which need to be taken into consideration when designing a treatment plan. Also, in research studies the interventions themselves are often tightly regulated to ensure that therapist A says exactly the same thing as therapists B, C and D in each and every session. In the real world, however, clients don’t like their therapists to follow scripts, and sometimes they want to talk about what is concerning them today rather than what the script says they should discuss today.

So in practice, real-world evidence-based practice involves therapists taking bits and pieces from techniques that have been shown to work and skilfully weaving them into a dialogue with clients that addresses both their here-and-now concerns and their longer-term problems. The difficulty is that therapists differ enormously in how skilfully they can weave therapy together. Real-world studies of effect size tell us that some psychologists are around three times more effective than others.

In other words, evidence-based practice might be about making sure that you are using the best techniques in therapy, but practice-based evidence means checking that your work as a therapist is actually meeting the standards expected.

This practice-based evidence idea is part of the central philosophy of Benchmark Psychology. All of our psychologists have agreed to collect outcome data for all of their clients, all of the time. This means that we can look back over the year and give ourselves a report card on our performance. In the interests of transparency, we would like to share that report card with you.

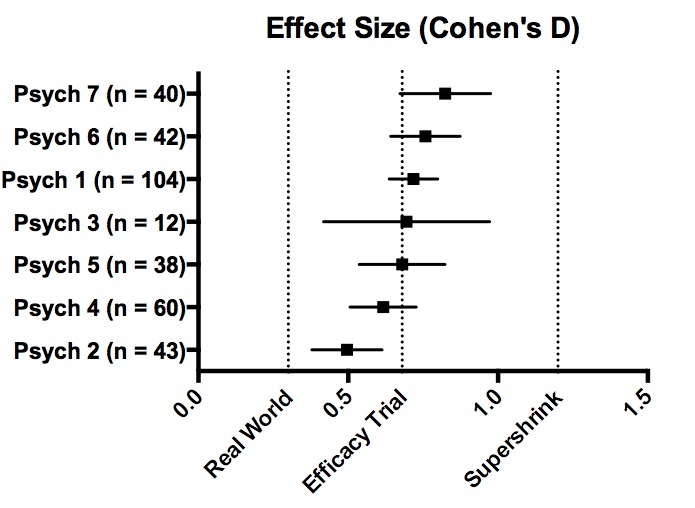

When we talk about the effectiveness of psychological therapy, we often use a statistic called Cohen’s d. It is a measure of effect size and helps us compare how much change we are making in reducing symptoms: the larger Cohen’s d, the larger the effect. Efficacy studies, with their single-problem client groups, typically achieve an effect size for therapy of around d = 0.7. A large real-world study in the United States of employee assistance programs (EAPs) and health maintenance organisations (HMOs) found a Cohen’s d of 0.2.
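For readers curious about the arithmetic, here is a minimal sketch of one common way to calculate Cohen’s d: the difference between two group means divided by their pooled standard deviation. It is an illustration only, not necessarily the exact method behind our report card. The helper name `cohens_d` and the symptom scores are hypothetical, invented purely to show the calculation, and the sketch assumes the before-therapy and after-therapy scores are treated as two groups (other variants exist, such as dividing by the pre-treatment standard deviation alone).

```python
import math
import statistics


def cohens_d(group_a: list[float], group_b: list[float]) -> float:
    """Hypothetical helper: difference in means divided by the pooled SD.

    A positive result means group_a has the higher mean, e.g. symptom
    scores before therapy compared with scores after therapy.
    """
    n_a, n_b = len(group_a), len(group_b)
    # Sample variances (n - 1 in the denominator)
    var_a = statistics.variance(group_a)
    var_b = statistics.variance(group_b)
    # Pool the two variances, weighting each by its degrees of freedom
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd


# Hypothetical symptom scores (higher = more distressed), not real client data
before = [30.0, 20.0, 35.0, 25.0, 40.0]
after = [25.0, 15.0, 30.0, 20.0, 35.0]
print(round(cohens_d(before, after), 2))  # prints 0.63
```

In this made-up example every client improves by 5 points, and because scores vary by about 8 points from client to client, the effect size comes out at roughly d = 0.63, close to the typical efficacy-trial figure.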

When we calculated our effect sizes, we expected to achieve results somewhere between these two figures. We expected that we would do better than some of the very profit-driven treatments offered by American EAPs and HMOs, but we also expected that it would be quite difficult to achieve better results than efficacy research trials.

As you can see from the chart, we were correct in thinking we could do better than the American real-world example, but wrong in thinking that we would be unable to exceed the research trial effect sizes. Nearly all of our psychologists achieved results that were better than efficacy trial effect sizes, which is tremendously reassuring: it suggests we are on the right track. The question is, what next?

I have recently found some data from a small study of so-called “super-shrinks”: therapists who achieve exceptional results compared with their peers. Some of them report effect sizes of d = 1.5. This gives us something to aim for going forward.

Thank you to all of our psychologists for allowing us to share this data, but most importantly, thank you to all the clients who have trusted us enough to place their recovery in our hands this year.